Hey, I wanted to thank everyone who took the time to read my blog in 2010. The stats helper monkeys at WordPress.com mulled over how this blog did in 2010, and here’s a high-level summary of its overall blog health:

The Blog-Health-o-Meter™ reads: This blog is on fire!

Crunchy numbers

A Boeing 747-400 passenger jet can hold 416 passengers. This blog was viewed about 5,400 times in 2010. That’s about 13 full 747s.

In 2010, there were 19 new posts, not bad for the first year! There were 49 pictures uploaded, taking up a total of 58 MB. That’s about 4 pictures per month.

The busiest day of the year was October 6th with 86 views. The most popular post that day was AMX on an iPad: iRidium vs. TPControl.

Where did they come from?

The top referring sites in 2010 were mocalliance.org, linkedin.com, google.pt, lmodules.com, and z-waveaustralia.com.

Some visitors came searching, mostly for ethernet over coax, niagara 7500, amx ipad, tpcontrol, and tpcontrol price.

Attractions in 2010

These are the posts and pages that got the most views in 2010.

AMX on an iPad: iRidium vs. TPControl July 2010

5 comments

MoCA: Ethernet over Coax for your multimedia devices August 2010

2 comments

Z-wave is better than X-10 June 2010

3 comments

AMX and Homeseer HS2: The ‘Auto’ in Home Automation October 2010

3 comments

Delorean iPod Dock March 2010

Whole-house automation integrates a very wide array of technologies, all of which pretty much fall into two fundamental categories: controllable devices that respond to commands and do something useful, and sensors that monitor, measure, and report conditions.

They work closely together with programmed triggers and events. Triggers are usually a change in device status or a sensor reporting some over-threshold condition. The Event is usually a programmed action that is expected to take place when a Trigger happens. In a relatively simple media room control example, the user presses a Play Movie button on a touchpanel, triggering a series of events that turn on the A/V system (if a sensor notes it is not already ON), select the A/V input if needed, dim the room lights, start video playout, etc. The programming to make this happen is straightforward (not a lot of conditional logic), and the complete automation scenario can easily be defined and documented for a programmer to execute.
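The Play Movie sequence above is mostly a fixed list of steps with a couple of "only if needed" checks. Here is a minimal Python sketch of that shape, assuming a hypothetical `MediaRoom` object that stands in for the real sensor readings and device commands (the names and command strings are illustrative, not AMX Netlinx code):

```python
from dataclasses import dataclass


@dataclass
class MediaRoom:
    """Hypothetical stand-in for the media room's device states and sensors."""
    av_power_on: bool = False   # what a power-sensing input would report
    current_input: str = "TV"   # currently selected A/V input
    light_level: int = 100      # room lighting, percent


def play_movie(room: MediaRoom, movie_input: str = "BluRay", dim_to: int = 20) -> list:
    """Run the 'Play Movie' event sequence and return the actions actually taken."""
    actions = []
    if not room.av_power_on:               # power up only if the sensor says it is off
        room.av_power_on = True
        actions.append("power_on_av")
    if room.current_input != movie_input:  # switch inputs only if needed
        room.current_input = movie_input
        actions.append(f"select_input:{movie_input}")
    room.light_level = dim_to
    actions.append(f"dim_lights:{dim_to}")
    actions.append("start_playout")
    return actions


print(play_movie(MediaRoom(av_power_on=True)))
# ['select_input:BluRay', 'dim_lights:20', 'start_playout']
```

The only conditional logic is the two state checks, which is why this kind of scenario is easy to document and hand to a programmer.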

In a Whole House Automation system there is a much more complex programmed relationship between devices, sensors, and conditions. Data from the same sensor can influence many events, and many sensors can influence the same event. Condition-sensing can extend well beyond whether the system is already powered up or not: temperature, time of day, existing light levels, state of other devices, phase of the moon, etc. can all directly or indirectly influence what action is to be taken when a trigger happens.

It’s inhuman…

Once you plan beyond media room A/V control and lighting, a smaller percentage of events are triggered directly by deliberate human action. This is what automation is…the automatic sensing and reacting to conditions with little or no human interaction. The Humans pre-define what is expected to happen in a given series of events and conditions; the largely unattended Machines do the actual work. Still more complex logic is needed to instruct the controlled devices to do their useful work.

User programmability

Triggers that initiate this automated processing can be a scheduled one-time event or a recurring event like starting the lawn sprinklers at 6:00 AM on alternate days. The triggers can directly cause some action to happen, or one or more Conditions can modify or delay the action. In our Irrigation example, conditions such as Time of Year and the data from moisture sensors or rain gauges can be used to influence the zone watering duration, or whether to water at all. Any device that can change state can be used to trigger automated actions: a doorbell button, a glass-break sensor, a motion sensor, a water-where-water-shouldn’t-be sensor, someone entering a front door lock code, a car entering a driveway, etc. Event triggers can be cascaded (one trigger can result in actions that trigger other events) and can be the result of something that didn’t happen, such as your daughter not arriving home from school between 4:00 and 4:30 PM.
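To make the irrigation example concrete, here is a minimal sketch of a recurring trigger (6:00 AM on alternate days) whose action is modified by conditions. The rain and soil-moisture thresholds, the base watering time, and the seasonal multipliers are all hypothetical values chosen for illustration; a real system would tune them per zone:

```python
import datetime as dt

BASE_MINUTES = 10  # hypothetical per-zone base watering time


def is_watering_day(day: dt.date, start: dt.date) -> bool:
    """Recurring trigger: every other day, counting from a start date."""
    return (day - start).days % 2 == 0


def zone_minutes(day: dt.date, rain_last_24h_in: float, soil_moisture_pct: float) -> int:
    """Conditions modify (or cancel) the action. Thresholds are illustrative only."""
    if rain_last_24h_in >= 0.25 or soil_moisture_pct >= 40:
        return 0  # enough water already: skip this cycle entirely
    # Time of Year condition: longer in summer, shorter in winter (Northern Hemisphere).
    seasonal = {6: 1.5, 7: 1.5, 8: 1.5, 12: 0.5, 1: 0.5, 2: 0.5}.get(day.month, 1.0)
    return round(BASE_MINUTES * seasonal)


def sprinkler_event(now: dt.datetime, start: dt.date, rain_in: float, moisture_pct: float):
    """Returns None if the trigger did not fire, else the zone duration in minutes."""
    if (now.hour, now.minute) != (6, 0) or not is_watering_day(now.date(), start):
        return None
    return zone_minutes(now.date(), rain_in, moisture_pct)


start = dt.date(2010, 7, 1)
print(sprinkler_event(dt.datetime(2010, 7, 3, 6, 0), start, 0.0, 20))  # 15
```

Keeping the trigger test separate from the condition logic mirrors the distinction in the text: the schedule decides *when* to consider watering, the sensors decide *whether and how much*.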

Condition-reporting devices like motion sensors can influence one or many events. A motion sensor on your home security system, while the system is Disarmed, can do double duty to trigger lighting and influence lighting levels and patterns depending on time of day or measured ambient light level. The more automated your home, the more complex the control algorithm.
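The double-duty motion sensor can be sketched as a single function that maps the security state, the hour, and an ambient light reading to a lighting level. This is a minimal illustration; the state names, lux threshold, and percentages are assumptions, not values from any particular security panel or lighting system:

```python
from typing import Optional


def motion_light_level(security_state: str, hour: int, ambient_lux: float) -> Optional[int]:
    """Lighting level (percent) for a motion event, or None for no lighting action."""
    if security_state != "disarmed":
        return None  # armed: motion belongs to the security system, not the lights
    if ambient_lux >= 300:
        return None  # room already bright enough, leave the lights alone
    if hour >= 23 or hour < 6:
        return 15    # late night: dim path lighting
    if hour >= 18:
        return 60    # evening
    return 100       # daytime but dark (overcast, interior room)


print(motion_light_level("disarmed", 2, 0))  # 15
```

The same sensor event feeds both the security logic and the lighting logic; only the surrounding conditions decide which one acts.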

That lack of direct human interaction is one of the things that makes Automation very complex. Somehow, the Automation control logic has to be programmed to do your bidding. That means a detailed knowledge transfer between you and the automation control equipment. Most people hire a programmer for that; the resulting program gets loaded into the device doing the controlling (usually called a Controller or a Processor). The controller can be a self-contained device, software running on a PC, or part of some other system, like a home security system that also does some home automation duties. You may need only one Controller or a network of Controllers. For now, it’s collectively just The Machine.

The Machine’s role is to be an Event-Action processor. It picks up events triggered by a schedule or by a sensor and carries out the programmed actions that result. In a typical AMX control system the Machine is one or more Netlinx processors interconnected with any of a gazillion different AMX and third-party devices that can sense status and conditions (inputs) and control things in your home (outputs).
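Stripped to its core, an Event-Action processor is a queue of incoming events matched against rules, each with a condition and a list of actions, where actions may raise follow-on events (the cascading described earlier). The sketch below is a generic Python illustration of that idea under hypothetical event and command names; it is not how Netlinx or any commercial controller is implemented:

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Rule:
    trigger: str                                # event name that fires this rule
    condition: Callable[[dict], bool]           # evaluated against current state
    actions: list                               # commands to send to devices
    raises: list = field(default_factory=list)  # follow-on events (cascading)


class EventActionProcessor:
    def __init__(self, rules: list, state: dict):
        self.rules = rules
        self.state = state

    def post(self, event: str, max_steps: int = 100) -> list:
        """Process one event plus any cascade; return the actions that fired."""
        fired = []
        queue = deque([event])
        steps = 0
        while queue and steps < max_steps:  # step cap guards against trigger loops
            current = queue.popleft()
            steps += 1
            for rule in self.rules:
                if rule.trigger == current and rule.condition(self.state):
                    fired.extend(rule.actions)
                    queue.extend(rule.raises)
        return fired


rules = [
    Rule("doorbell", lambda s: s["tv_on"], ["tv:mute"], ["visitor_announced"]),
    Rule("visitor_announced", lambda s: True, ["panels:msg Front door"]),
]
print(EventActionProcessor(rules, {"tv_on": True}).post("doorbell"))
# ['tv:mute', 'panels:msg Front door']
```

The step cap is a design choice worth keeping in any cascading system: two rules that trigger each other would otherwise loop forever.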

The Machine’s Program is usually something your Certified AMX Reseller-agent-person comes up with, after you offer up your extensive list of what all you want to be automated. Whenever you think of something new to do, or wish to add a new Condition to an existing action, you call your programmer back, the program is changed, recompiled, reloaded into your Netlinx controller, and verified functional. Off in the distance, a Bill Customer event is triggered.

All of which works quite well, especially if you know exactly what you want your automated home to do, or are dealing with a very experienced automation designer who can work with you to extract all the intricate details of your Grand Automation Plan and accurately translate your wishes into program code. There are plenty of very good ones out there who do all this quite well.

But there is a problem with that process, in my house at least. I didn’t think of everything up front. I actually planned very little of my automation scheme; I had no clue at all about what it would eventually become. My system is the result of a 28-year evolution. I change my mind frequently as new ideas surface, and I need some way to make simple, non-programmatic changes.

The DIY Factor

I want to be able to add, modify, and delete events and conditions myself, without having to modify Netlinx code or write code at all, at least for simple event-action logic. The AMX system, with its massive array of hardware devices and software modules, is the best and most comprehensive in the world for sensing conditions, processing events, and controlling devices…but, alas, it (like Crestron, Control4, and nearly all of the commercial home automation products) does not surrender itself willingly to user-implemented features and modifications.

So… I had to come up with some dashboard-like, GUI-driven event-action processor that could let me create events, assign triggers, and define desired actions under various selectable conditions. This new processor would have to communicate and interact with the AMX processor to have access to AMX devices: it would send and receive commands, messages, and events to and from the AMX controller, essentially dividing and sharing the automation task between itself and AMX. Ideally the AMX program would change only when new devices were added; for setting up new events and triggers this other Processor would become the Master.

My requirements: No compiling, no reloads, no program restarts, no PC reboots…create a new named event by filling in text boxes, selecting Conditions from drop-down lists, associate Events and Devices from items in a database, save, and be done. Automation Utopia for the DIY-er.
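The "no compiling, no reloads" requirement boils down to treating rules as data that the processor interprets at runtime, so that a GUI form or database row is all it takes to add an event. A minimal sketch of that idea follows; the JSON schema, device names, and operator set are hypothetical and are not HS2's actual event storage format:

```python
import json
import operator

# Comparison operators a rule editor might offer in a drop-down list.
OPS = {"==": operator.eq, "!=": operator.ne, "<": operator.lt,
       ">": operator.gt, "<=": operator.le, ">=": operator.ge}

# Rules as data (hypothetical schema); in practice these could live in a database.
RULES_JSON = """
[
  {"name": "Porch light at dusk",
   "trigger": "motion.front_porch",
   "conditions": [{"device": "sensor.outdoor_lux", "op": "<", "value": 20}],
   "actions": ["light.porch=ON"]}
]
"""


def load_rules(text: str) -> list:
    """Parse rule definitions at runtime: nothing to compile or reload."""
    return json.loads(text)


def condition_met(cond: dict, state: dict) -> bool:
    """A condition on an unknown device simply fails rather than raising."""
    return cond["device"] in state and OPS[cond["op"]](state[cond["device"]], cond["value"])


def matching_actions(rules: list, event: str, state: dict) -> list:
    actions = []
    for rule in rules:
        if rule["trigger"] == event and all(condition_met(c, state) for c in rule["conditions"]):
            actions.extend(rule["actions"])
    return actions


rules = load_rules(RULES_JSON)
print(matching_actions(rules, "motion.front_porch", {"sensor.outdoor_lux": 5}))
# ['light.porch=ON']
```

Adding a new event means appending another JSON object; the interpreter code never changes.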

Alas, no such thing existed or exists today. But there is something that, with a little high-level integration work, comes very, very close: a combination of AMX Netlinx controllers, AMX control devices, and Homeseer HS2 home automation software (www.homeseer.com).

HS2 is a Windows-based automation product that is completely programmable by the user. In my system’s architecture shown to the left (click on it to get a bigger view), AMX manages device input/output for touch panels, IR sensors, IR emitters, some motion sensors, several contact closure inputs, relay outputs (sprinklers, garage door motion), LED message boards, HVAC thermostats, etc.

Homeseer manages all X-10 and Z-wave lighting (see my other posts), some motion sensors, several RF input devices (like the Homelink button on the car’s sun visor), temperature monitors on the HVAC compressors, a Current Cost energy usage monitor, etc. An old-school two-way RS-232 data link connects them so the two “brains” can exchange messages and commands with each other.
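The post doesn't describe the message format used on the RS-232 link, so the following is only a sketch under an assumed one: ASCII messages of the form `KIND|target|value`, each terminated by a carriage return. The interesting part is framing. Serial reads return arbitrary chunks, so a receiver has to buffer bytes until a full message arrives. The bytes could come from a serial library such as pyserial; here they are fed in directly:

```python
def parse(message: str) -> tuple:
    """Split 'KIND|target|value' into its three fields (hypothetical format)."""
    kind, target, value = message.split("|", 2)
    return kind, target, value


def encode(kind: str, target: str, value: str) -> bytes:
    """Build one CR-terminated ASCII message for the serial link."""
    return f"{kind}|{target}|{value}\r".encode("ascii")


class LineFramer:
    """Reassembles CR-terminated messages from arbitrary serial read chunks."""

    def __init__(self, terminator: bytes = b"\r"):
        self.buf = b""
        self.term = terminator

    def feed(self, chunk: bytes) -> list:
        self.buf += chunk
        # Everything before the last terminator is complete; the tail waits for more bytes.
        *complete, self.buf = self.buf.split(self.term)
        return [parse(m.decode("ascii")) for m in complete if m]


framer = LineFramer()
print(framer.feed(b"EVT|doorbell|1\rCMD|tv"))  # [('EVT', 'doorbell', '1')]
print(framer.feed(b"|vol_down\r"))             # [('CMD', 'tv', 'vol_down')]
```

A plain-text, line-oriented format like this is easy to watch with a terminal program when debugging, which matters when two separate "brains" are talking to each other.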

The division of responsibilities lets me use Homeseer’s most excellent event/action manager and still interact with any AMX gear. The AMX system still has a very complex program. It has extensive decision logic too, deliberately, so it can still do critical things if Homeseer is down or misbehaving. But that logic doesn’t have to be modified just to make a small change or add some simple events and actions.
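One common way to let one controller "still do critical things" when its partner is down is a heartbeat watchdog: the partner sends periodic messages, and if none arrive within a timeout, the local fallback logic takes over. The post doesn't say how the AMX side detects a misbehaving Homeseer, so this Python sketch, with its 30-second timeout and `forward`/`local` labels, is an assumed design rather than the actual one:

```python
import time
from typing import Callable, Optional


class PeerWatchdog:
    """Tracks when the peer controller was last heard from."""

    def __init__(self, timeout_s: float = 30.0, clock: Callable[[], float] = time.monotonic):
        self.timeout_s = timeout_s
        self.clock = clock                  # injectable clock makes testing easy
        self.last_seen: Optional[float] = None

    def heard_from_peer(self) -> None:
        """Call on every heartbeat (or any valid message) from the peer."""
        self.last_seen = self.clock()

    def peer_alive(self) -> bool:
        return self.last_seen is not None and self.clock() - self.last_seen <= self.timeout_s


def handle_critical_event(event: str, watchdog: PeerWatchdog) -> str:
    if watchdog.peer_alive():
        return f"forward:{event}"   # let the peer's event manager decide
    return f"local:{event}"         # peer silent: run built-in fallback logic
```

Using a monotonic clock avoids false alarms when the wall clock is adjusted, for example at a daylight-saving change.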

Homeseer manages all lighting, does all the event logging and reporting, and creates energy usage reports for use by energy management events. It also extracts Caller ID from incoming phone lines (landline, and wireless when the mobile phones are docked) and interacts with a Vonage VOIP connection to call out for emergencies and allow remote control of everything from any phone.

In future posts I’ll show how the Homeseer user interface is used to control AMX and other third-party devices and interact with a variety of compatible sensors.

//Mark

A little different posting style this time…less rhetoric, just bulleted reasons why I like my AMX Mio Modero R4 handheld remote control. I mentioned it in my previous post and thought I would elaborate on why it is a great handheld control device. Click on the picture at left to see this thing up close or check it out in detail at http://www.amx.com/products/MIOR-4.asp.

As usual this came to me broken and dirt cheap; I can’t justify this stuff new. For the curious I added a bit to the end about what it took to fix it.

Likes and Dislikes

There are some extremely important features I like and a dozen or so less important reasons to appreciate this thing:

- The R4 has a touchscreen that is programmed with graphics and icons like any other AMX touchpanel. It is small, but big enough to be quite useful.

- You can position graphic elements of any size that can fit within its resolution, and make a button, slider, or text display out of it. No predefined grid squares or other positioning confines to mess with.

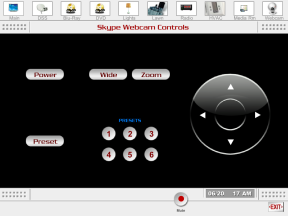

- The R4 is two-way, meaning it can send commands and data, and receive data for display on the touchscreen. I use it to display Caller ID, song titles, security events, HVAC settings and values, Skype call requests, and other feedback.

- R4 graphic elements can have many states and can be animated. Being 2-way means you can control button state (on, off, colors, image, etc.) from the AMX controller, on the fly.

- The hard buttons (all of them, including the channel and volume rockers) are screen-context sensitive. That means buttons can send different codes depending on which screen is visible on the LCD. A hugely valuable feature. For example, the VOL up/down controls the TV volume most of the time, but controls the Bose system when the Bose page is displayed on the LCD.
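The screen-context-sensitive hard buttons amount to a lookup keyed on both the visible page and the button, falling back to a default mapping. On the R4 itself this is set up with AMX's tools and the controller program; the Python below only illustrates the lookup logic, and every page, button, and command name in it is hypothetical:

```python
# (visible page, button) -> (target device, command); page-specific overrides.
BUTTON_MAP = {
    ("Bose", "VOL_UP"): ("bose", "VOLUME_UP"),
    ("Bose", "VOL_DOWN"): ("bose", "VOLUME_DOWN"),
}

# What each hard button does when the visible page has no override.
DEFAULT_MAP = {
    "VOL_UP": ("tv", "VOLUME_UP"),
    "VOL_DOWN": ("tv", "VOLUME_DOWN"),
    "CH_UP": ("tv", "CHANNEL_UP"),
}


def route_hard_button(visible_page: str, button: str):
    """Page-specific mapping wins; otherwise use the default (None if unmapped)."""
    return BUTTON_MAP.get((visible_page, button), DEFAULT_MAP.get(button))


print(route_hard_button("Bose", "VOL_UP"))   # ('bose', 'VOLUME_UP')
print(route_hard_button("Main", "VOL_UP"))   # ('tv', 'VOLUME_UP')
```

Only the exceptions need to be listed per page, which keeps the mapping small even with many screens.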

Other likes:

- It looks like a remote. Visitors will instinctively know what it is, and will expect to use it to control the TV at least.

- Fits well in the hand: right width, nice balance.

- Rechargeable battery with good run time.

- It wakes up instantly when you pick it up, calling attention to its focus: the LCD touchscreen.

- It has well-placed, pre-labeled buttons for the things that would be common to any A/V control device. No peeling labels, no sending out for engraving, no cryptic, low-res Soft Label icons near the buttons to decipher.

- Screen resolution, color depth, and touch sensitivity are good.

- Microprocessor is fast enough to quickly swap page views and smoothly keep up with touch sliders. No annoying screen-repaint delays.

- Base is heavy and heavy-duty. No chasing it around the table when docking the Remote.

- Feels solid. More important than you might think, especially for something that costs this much.

- Reliable…doesn’t lose communication with its ZigBee receiver.

- Classy, elegant look in a boxy old-school way. Rectangular buttons, rectangular frame, hard edges, chrome trim and all. Not one of those generic-looking remotes some designer thought should look like a dog bone and everyone else copied. No ‘symphony of ovals’ à la the 1996 Ford Taurus. Just... square.

Cons:

- One internal part can be a bit fragile (see note). Don’t drop this thing. Inertia’s a killer.

- Configuration requires access to the AMX TPDesign4 program. AMX makes this virtually impossible for DIYers. Not an issue if you are purchasing this new through dealers.

- Can’t think of anything else. Usually I can, so that says a lot.

I started with a cast-off

Note: Mine was broken, of course; like nearly all of my AMX gear, that’s the only way I can justify one. I took the usual chance on fixing it. It had clearly been dropped (slightly flattened upper-right corner); inertia sheared off the ends of an internal mezzanine connector that connects the R4’s Radio/Display/Microprocessor board with the button/wake-up sensor/charging circuit board (I’m guessing on the board functions). This is a very small, very delicate, high-density connector and not really practical to replace without a few megabucks’ worth of surface-mount repair equipment and skills I don’t have. Fortunately the tendency on these is to break away the ends that are part of what keeps the mating connectors aligned; the actual gold contact fingers were fine. The remote is well sealed, so the tiny broken connector plastic bits were still rattling about inside. The fix was to use tweezers and a toothpick to dab on a microscopic bit of airplane glue and position the broken-off bits. Let it dry a couple of hours, then mix up some epoxy and bead it up around the outside of the connector to bolster the whole connector shell’s strength. It should now be a bit more rugged.

//Mark

With all the chatter about using iPods, iPads, and Androids to control the world, it has become popular to predict the demise of everything from AMX and Crestron-like dedicated touch panels to the lowly one-hand IR remote controls.

There is no doubt the iPad changed the standards and the price tolerance for control devices. The things are undeniably sleek, attractive, compelling, and cool…and they can do about anything.

But like any device with versatility aplenty to replace multiple gadgets, they, too, have their drawbacks, quirks, and limitations. One of their most severe limitations is something I call the Grab and Use Instantly factor. G&UI is a fundamental consideration in a well-designed home control user experience. If your workflow (yes, I rather hate that over-used word, but I can’t offer something better) requires immediacy, the iPad is not a particularly good choice for a control device.

A proper control design always involves matching workflow with user experience. If you settle in to watch a movie while browsing IMDB for information on the actors, skipping over the agonizing boatload of commercials every 10 minutes and occasionally getting sidetracked to adjust the thermostat or change lighting levels, well... the iPad and its ilk are a perfect choice for you. Your iPad becomes part of you, clutched and used throughout the entire experience. You will gladly trade the time to load and switch apps for the many conveniences offered by such a multi-talented device.

I do exactly that when I’m lounging around uninterrupted. I love our iPad.

But that isn’t how life in my house works very often. More often, the TV or Internet radio is turned on for background content while our attention is focused elsewhere on something like preparing dinner or yakking to our friends on the phone. Something will momentarily divert our attention to the media: a loud commercial on the TV, a song plays and we want to see who the artist is, etc. We stop what we’re doing, press a button, and immediately resume our original focus.

On the rather rare occasions when my wife and I finally settle down to watch TV the experience is almost always interrupted. The phone rings. Someone comes to the door. These things all call for instant action. In my house the time between the first ring of the phone and the “turn down the TV” plea from the adjacent room can be measured in milliseconds.

Getting an iPad to the point where you can instantly react to tweak the volume or pause the TiVo takes a while if you weren’t already sitting there, iPad in hand, control app loaded.

Those volume, pause, mute, and Display Artist buttons on the kitchen wall keypad and the lowly one-hand IR remote start looking pretty good when you are in a hurry.

Which is why in our house we have in the Family Room a handheld remote, a conventional AMX touchpanel, and an iPad when I haven’t wandered off with it. They all have their place in our workflow. They all have overlapping control subsets, each suited to time, place, and environment.

I like all of these devices whenever their design matches up well with our workflow. The AMX touchpanel is favored as the permanent device on the end table because it is Instant On (no loading apps) and because it has a few Real Buttons that always just work. Top left turns on the TV, the Navigation pad instantly changes the channel and volume. No waiting. Its many screens pop up instantly and let me control anything in the house quickly. It has convenient TV channel icons for the family members (me) who can never remember channel numbers. It is rigid and stable; it doesn’t flex or wander around the table when you use it. You can un-dock it and use it with two hands if you are going to do something extensive, or just leave it in its dock and jab at it for something simple. Its dock places it at a useful height, and it can be tilted to any angle and stay there. There’s no ugly and fragile charging cable sticking out of its side. I can programmatically control its backlight (bright in daytime, dim at night or when the TV is on with the room darkened) and wake it up and push messages to it (caller ID). And it doesn’t wander off to be used for something else.
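The backlight behavior described above (bright in daytime, dim at night or when the TV is on in a darkened room) is a small rule that the controller could evaluate whenever the time, TV state, or lighting level changes. A minimal sketch, where the daytime hours, the 20% "darkened" threshold, and the backlight percentages are all assumptions for illustration:

```python
def panel_backlight(hour: int, tv_on: bool, room_lights_pct: int) -> int:
    """Return a touchpanel backlight level in percent (thresholds are hypothetical)."""
    daytime = 7 <= hour < 19
    if tv_on and room_lights_pct <= 20:
        return 15  # TV on in a darkened room: keep the panel from glaring
    return 90 if daytime else 35


print(panel_backlight(21, True, 10))  # 15
```

The darkened-room check comes first so that a movie in the afternoon with the shades drawn still gets a dim panel.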

In many ways (and those are ways I like), it is nothing like an iPad.

My trusty AMX Mio R-4 has similar virtues. It’s a one-handed control with a real, hard-plastic Volume rocker button that is right where it should be: near your thumb when you grab it, instantly responsive. And it has a channel up/down rocker to surf the local TV channels, like when searching for actual bits of local news between all the car commercials. That’s a lot more convenient than channel icons when the channels are adjacent. It has a rugged and heavy drop-in charging base with power contacts that will never break off. And it always just works, never a pause to wait for anything to show up on it.

And the iPad? I use the iRidium software I blogged about before (an updated version fixed the few remaining graphics issues) to control anything the AMX panel controls. Of course it takes a few seconds to switch apps, and it doesn’t have hard buttons for instant control…but it has a Netflix player and a gazillion apps to toy around with. Sometimes the iPad is my TV and its own remote control. I like it, I understand it sets a new standard for graphics quality and touch experience, and I know it will cause AMX, Crestron, and other dedicated touchpanel makers to markedly change their thinking and product designs.

I worry about that a bit. I’m afraid that the buying public will trade the purpose-designed features and values of the dedicated touch panel for the general wonderfulness of the iPad and similar devices. When those old dedicated designs disappear we may end up compromising the user experience in ways I’ll probably regret. Much like giving up voice quality and connection reliability for cellular mobility.

Randy Stearns has some interesting thoughts along similar lines. Read them on the CEPro website:

http://www.cepro.com/article/randy_stearns_weighs_in_on_ipad_3d_streaming_media/

//Mark

I get more questions about my Home Automation hobby than about all my other Blog subjects combined, and more lately than ever before.

I think the increase reflects incredibly fast-paced advancements in technology, and the fascination with home electronics that those advancements produce.

Digital Television, 3D television, Internet delivery of video and movies directly to DVD players, iPhones and iPads as do-anything devices–these all conspire to, as one major home automation retailer puts it, “make life more interesting, convenient, and fun”. Who among us doesn’t respond to such compelling stuff?

I’m presented with more questions now because more people are aware of the possibilities of a computerized, automated home. What starts out as a mission to simply consolidate your array of A/V system remote controls in the new Media Room quickly expands to control scene lighting, motorized shades and blinds, and climate controls. Sometimes it stops there. Sometimes the bug bites hard and a quest to automate everything is on.

My own interest in the Automated Home (or Connected Home, or Cyberhome, to offer a few synonyms) started simply enough as a way to turn off lights my toddlers left on. Through sheer luck I’ve become one of those people with a hobby that directly relates to what I do for a living.

In my professional life I “design” products. Not the hardware and software, just their characteristics, their look, their feel. Sometimes the inspiration for them is my own; far more often the ideas and concepts come from others. They all start with a mission to solve some problem; my job is to imagine how the resultant devices will interact with people. I study the user and their industry. I think about who the user is, their environment, their lifestyle, how they would expect to interact with the device, what features the device would need to expose to fulfill the mission, how expansive or contained it should be, etc. I specify features, I write product specs…then I make products happen, driving and interacting with (and sometimes directly managing) the talented people who actually create such products.

But as I said, I am no longer the guy who writes software or designs hardware professionally. I sometimes miss that direct-from-my-hand feeling of accomplishment…so I go home and write home automation software in my spare time. It’s a creative outlet, a way to not only envision but to execute a design, an idea.

Some of it is actually useful.

For some time now I have focused on developing products for the commercial media compression and distribution industry—products on the source end of what is known to consumers as Internet Streaming, IPTV, Video on an iPhone and other mobile devices. More industrial applications include Digital Signage, conventional broadcast television, and in general anything that takes video where you have it and puts it where you want it.

All of which means I get to play professionally with the technologies that also interest me personally and, conversely, use my experiences with home automation to influence my thinking on the design of products that deliver video to the home.

My automation system is essentially a testbed. It does not represent what a consumer would pay a real AMX specialist to automate their new multi-million-dollar home. I have industrial-looking devices that are guaranteed to give any interior designer nightmares. My wife graciously tolerates them, even embraces most of them. Together they represent what is possible, what is practical, and what can be combined to create something that is at the same time interesting, compelling, and convenient.

Home Automation done well can save money, improve safety and security, help overcome the challenges of physical disabilities, and detect urgent issues such as water leaks in time to prevent disasters. It lets you adapt to unexpected events, like remotely unlocking the front door when your guest arrives early or your son forgot his key. You can remotely adjust the A/C system when you stay at the office later than you expected. It can warn you when events don’t happen, like your daughter did not arrive home from school in the expected time window. It might even save a life: it can call you immediately if the smoke detectors are tripped, if someone unexpectedly opens an outside gate while no one is at home, or if a special emergency unlock code is entered to open the front door.
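Warning when something *doesn't* happen needs a slightly different kind of trigger: the check can only be made once the expected window has closed. A minimal sketch of the after-school example, assuming a hypothetical `arrival` timestamp (for instance, the last time her front door lock code was entered) and the 4:00 to 4:30 PM window from the text; school-day calendars are left out:

```python
import datetime as dt
from typing import Optional


def missed_arrival_alert(now: dt.datetime, arrival: Optional[dt.datetime],
                         window_start: dt.time = dt.time(16, 0),
                         window_end: dt.time = dt.time(16, 30)) -> bool:
    """True once today's window has closed without an arrival inside it."""
    start = dt.datetime.combine(now.date(), window_start)
    end = dt.datetime.combine(now.date(), window_end)
    if now < end:
        return False  # window still open: too early to judge
    arrived_in_window = arrival is not None and start <= arrival <= end
    return not arrived_in_window


print(missed_arrival_alert(dt.datetime(2010, 10, 6, 16, 31), None))  # True
```

In an event-action system this would typically be a scheduled event at 4:30 PM whose condition is "no arrival logged since 4:00", with the action being a phone call or text.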

Now more than ever is a great time to be doing these things. There are more products, more vendors, and more real opportunities for automation at reasonable cost than ever before. It is no longer just the domain of rich guys with mansions and too much money. This is Do It Yourself Mecca, and there is a vast array of devices and techniques and software to choose from to automate anything and everything in your home.

I have found it helpful when answering questions privately to serve up bits and details of my own system for illustration. So, in this Home Automation section of my blog, I’ll add some more public detail about what I have found helpful along with some of the not-so-useful things I and others have done. If any of it serves to educate, or to make what you do in any way more fun, I will have accomplished my goal in this Blog.

The pictures below (and scattered about in various posts) are diagrams of my home system, less some rather comprehensive security-related stuff I should say. Click on any of them to see a larger version. I’ll be explaining each of them in the coming weeks.

//Mark

- AMX and Homeseer share automation duties

Home automation is about interconnecting and automating devices wherever they are throughout your home. Sometimes automation becomes a real challenge to implement: you can’t move the devices, you can’t get wires to them, and you want to control them from several other locations that also don’t have any infrastructure in place to support your efforts.

Unless you designed and built your own home, your sprinkler controller is probably mounted in some inconvenient place on a garage wall. Your thermostat is mounted exactly where you would expect it to be for properly sensing air temperature, but not where you would most like to control it. Most likely your TV and other multimedia devices are located wherever the builder chose to run your antenna coax, and your phones, if not cordless, are positioned wherever the same builder chose to place your RJ-11 wall plates. TV goes there, you sit here. End of discussion.

Lighting is one of the most common things to automate. Light switches are at least placed with some attention to convenience for the user, usually where the electrical code (and good practice) dictate. But they were wired, naturally enough, with only what was needed to turn lights on and off. You probably don’t have any extra wiring hidden in the walls, waiting to assist you in your quest for automation.

Whole-house automation control systems have to reach out and touch these things while also supporting new controls and user interfaces where you want them. Unless you are prepared to open up walls and add lots of new wiring, you will need to take advantage of every in-place or reasonably-priced signal path you have available: wireless RF (as in WiFi, ZigBee, and Z-wave), existing AC power wiring, existing telephone wiring, existing cable TV coax, existing doorbell and security system wiring, etc. A comprehensive, highly distributed automation system may use all of these interconnect methods to communicate to all devices and endpoints.

Fortunately there are all kinds of ways to make use of existing communication paths for home automation without degrading their performance or interfering with their original purpose.

This post is about using your existing Cable TV coax to get Ethernet to multimedia devices: your TV, your Netflix-enabled Blu-ray player, and your Media PC. I’ll write later about taking over unused pairs in your telephone wiring for other whole-house automation situations.

When wireless won’t do: Ethernet over Coax

One of the rules in the Unwritten Homebuilder’s Code is that no Ethernet-compatible twisted-pair wiring shall terminate anywhere near where any Ethernet-enabled media device will likely be located. Only Coax and no more than one Coax shall terminate in such locations.

The diagram at the top of this post shows one method I used to get wired Ethernet at several locations where I needed the bandwidth and reliability of a wired connection but had only the single Verizon FiOS coax cable feeding the Verizon set-top box.

The technology to do this comes from the Multimedia over Coax Alliance, or MoCA. They developed a way to superimpose IP traffic on cable TV coax without interfering with normal cable channelization. If you have Verizon’s FiOS service you already have MoCA technology in use. The Verizon-provided Actiontec MI424-WR router injects IP onto the cable for use by the Verizon-Motorola set-top boxes.

You can extract Ethernet off of the cable for your Internet-enabled media or automation devices. If you have FiOS you need only obtain a MoCA Coax-to-twisted-pair converter and a compatible Cable TV splitter. D-Link, Netgear, and others make such converters, but they are fairly expensive.

The low cost alternative? Get another Actiontec MI424-WR router just like the one Verizon installed. They go for as little as $10 on eBay. Connect it as shown in Figure 2, turn off its WAN, DHCP, and routing features, and voila…you have four RJ-45 switched Ethernet ports AND an additional WiFi access point with its own SSID (you can turn that off, too, if desired). Nifty and cheap, and you can do this anywhere you have coax and need Ethernet.

I use the four routers shown to get wired Ethernet performance near my family room TV, my media room, the master bedroom, and one of the upstairs bedrooms.

Don’t have FiOS? Your Cable TV coax is not in use? Just locate an additional MI424-WR anywhere you have a co-located coax and Ethernet port. Use a splitter to inject Ethernet onto the coax if it is already in use for Cable TV. MoCA Internet traffic doesn’t seem to bother most conventional analog or digital cable TV systems, but be careful: cable systems that do not isolate incoming programming from house wiring may attenuate or in other ways not react too well to renegade MoCA traffic originating inside a home.

You can learn all you need to know about Ethernet over coax at the MoCA website: http://www.mocalliance.org/

//Mark

(Updated Oct 13 2010) I noted a while back that the iPad was likely to be a serious contender as a home control device (insert a big Duh! here), outlining several reasons I thought it might cause a little consternation at AMX, Crestron, and other developers of high-end home automation components and systems.

Starting at about $500 each, the iPad costs much less than any comparable AMX or Crestron device, so it has the potential to displace what I would guess to be a large part of their market.

So I was a bit surprised when AMX not only publicly embraced the iPad as an alternative control device, but partnered with an outside software developer to make iPad integration into the Netlinx environment truly seamless. Which, as I have learned from testing that product over the last few days, is exactly what it is.

Perfectly seamless.

Personally I think the iPad will increase both awareness of and access to home control technologies and drive a corresponding increase in opportunities for everyone. Rich guys will still buy AMX controllers and panels (and iPads) for their mansions; the rest of us will make do with often lesser but increasingly capable stuff.

Which brings me to the title of this post. As part of my ‘will work for gadgets’ program I managed to barter for a working iPad and use it as a home control device. Research into what I can do with it to interact with my Homeseer-AMX environment led me to three programs: HSTouch for Homeseer (which I have already been using for some time on an iPhone), and two new programs that can directly communicate with an AMX central controller. One of those is offered by AMX; the other is from an independent third party.

The AMX-authorized iPad product is called TPControl from Touch Panel Control Ltd. They offer a free 21-day trial, after which the price is likely to be in the over-$500-per-copy range. That’s complete speculation because even pricing for AMX products seems to be completely inaccessible to us mere mortals. You have to go through an AMX dealer to purchase the software license token, and I have yet to find an AMX dealer excited about quoting or selling me one of anything–my only true complaint about AMX channel practices. On my budget I have about 5 days of enjoyment left before the TPControl screen goes dark forever.

There is an alternative: Russian company iRidium has done something very similar for about $160 US. Their version has been out for a while, and I have been experimenting with their software.

So, how do these two products compare?

The AMX-sanctioned iPhone/iPad program does not have or need its own graphics design environment. When you install the trial package it modifies your existing TPDesign4 program from AMX, adding iPhone and iPad choices to the list of available target devices. You then simply load an existing TP-4 design and use the Save As Different Panel Type feature, selecting the iPad as you would any other AMX panel. If you don’t have a previous design, run TPDesign4, select the iPad for a new design, and start drawing.

Iridium lets you import designs created in TPDesign4 using their Iridium Wizard or, if you prefer, you can create a GUI from scratch using their included GUI development tool. I didn’t use their design tool, but it looked capable and comprehensive enough.

Getting the graphics design into the iPad

Both programs have a Server component that transfers graphics into the iPad. It is used to load your initial design and again to reload the iPad any time you change your design. Once your iPad is loaded and started, it communicates directly with the Netlinx master via TCP/IP. Both programs use iPad Setup to specify the IP address of the Master, select the desired Netlinx controller port (usually 1319), and specify the panel’s Netlinx address. Incidentally, I loaded both Iridium and TPControl on one iPad at the same time, configured both programs with the same touchpanel device address, loaded both using the same TP-4 project, and pointed both to the same Master. They co-exist perfectly; you can run either program and surface exactly the same controls, making an apples-to-apples comparison very easy.
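If neither panel app will connect, a quick first check is whether the master's controller port answers at all from the network the iPad is on. The sketch below is a minimal Python check that assumes only what is described above: the master accepts TCP connections on port 1319. The function name and the example address are hypothetical, and a successful connection shows only that the port is open, not that the panel address or design is right.

```python
import socket

# Netlinx controller port mentioned above; adjust if your master uses another.
NETLINX_PORT = 1319


def master_reachable(host: str, port: int = NETLINX_PORT, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        # create_connection resolves the host and completes the TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, unreachable, or timed out: a panel app would fail the same way
        return False
```

Usage: `master_reachable("192.168.1.50")` (a made-up address) returns True if something is listening on 1319 at that host.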

The results

I experienced no design or run-time problems at all with the current versions of both programs. Iridium’s first version had a few issues with complex graphics, as you can see from the first picture: some elements were shifted southeast from underlying components. Notice that the top row of icons reproduced fine; only the Chameleon graphic elements were affected. Iridium has since corrected that issue, and the version I downloaded and configured in October 2010 looks exactly as it does in the TP-4 design screen. Interestingly, both programs had very slight issues with complex graphics positioning, as noted in the picture captions. Nothing too annoying or severe, and likely corrected by now.

Graphics quality from both programs is excellent, and there is no reason it shouldn’t be: screen resolutions and LCD display quality have taken leaps ahead in recent years, and both the iPad and all recent AMX touchpanel designs have very high-quality displays. There is no significant difference in graphics quality between TPControl and Iridium.

There are some other similarities and differences. Here are my thoughts:

1) TPControl app loads faster. Much faster. Using the 21-page design I created for my family room AMX MVP-8400 panel, TPControl took about 2 to maybe 3 seconds, tops. Iridium takes about 14 seconds. I suspect the AMX app is doing some caching to keep graphics elements active somewhere…perhaps iRidium can offer some improvement here over time. [Oct 2010 update: faster, but still several seconds]

Once loaded, both are equally responsive. The Netlinx controller always responded immediately to any touchpad event, just as it does to any wired or Ethernet native AMX panel.

2) I could find no option settings that would cause either app to stay loaded in the iPad while allowing the screen backlight to dim or turn off, so there isn’t the instantaneous touch-and-go experience of a standard AMX panel. That can be a bit annoying if the iPad is your only control device. I would like to use it when watching TV to instantly ride herd on audio level, skip commercials, pause when the phone rings, look at the Caller ID message to decide if I need to answer the phone, etc. Users don’t expect to wait even a second in these instant-response situations. [Update: Perhaps Apple’s upcoming O/S update will allow these programs to stay resident and restore quickly.] On the other hand, I can’t conveniently look up information about the show or actors when using my MVP-8400. For now, at least, you can leave the app active with the backlight on to maintain instant access.

3) There are, of course, no external buttons on the iPad that correspond to those on most AMX panels. That will affect your design; in my test case I loaded a control set that uses the MVP-8400’s external buttons to power on and off the A/V equipment and adjust volume. I had no provision for that in the screen designs. If your current design uses these external buttons you will need to add corresponding on-screen controls into your TPDesign4 designs.

4) Setup effort is about equal. Iridium’s Wizard was a bit difficult to understand, and their documentation…well…hasn’t quite caught up to the software quality yet. [Update: better now, but still not as clear as it could be.] It took me a while to figure out the best settings to preserve the TPDesign image quality, aspect ratio, etc. Server operation was better, although I hit a small snag understanding why my design changes and revisions weren’t being uploaded. Once I figured out my error all went well, and the Iridium server displayed very clear progress information during the iPad transfer. No snags of any kind in the software itself, just cockpit trouble on my part.

5) Both are locked to a single i-Device. That means you have to pay twice to put the app on your iPad and also on your iPhone. No problem for the typical rich-guy AMX person; a bit disappointing for scroungers and tinkerers like me. It would be nice to have a Family Pack bundle that allows one iPad and two smaller iPhone installs.

6) Iridium has its own graphics design suite, which makes it a good choice for anyone who scrounged a used Netlinx controller and was lucky enough to get a copy of Netlinx Studio (the main AMX control software design environment) when they gave away AMX Control CDs at trade shows. If you don’t have and can’t get TPDesign4, Iridium is for you.

Final thoughts

One of my esteemed colleagues over on the Australian Z-wave board recently described me as one of the fortunate few having access to AMX gear. There are more AMX (and Crestron, Control4, and others) tinkerer-class users out there than one might think, but a great many of them are installers in the business with access to the required AMX development software. Like me, they can’t afford a single AMX product at retail, but they can get used equipment at great prices, and they have the tools and knowledge to program it all. All the non-touchpanel gear remains as useful and versatile as it was when new, so age doesn’t detract from the experience. But these folks have the same problem I have getting good panels with crisp, clear graphics: new-ish AMX panels are too expensive even when used…so we settle for older touchpanels from the secondary market. Great as they are, the older AMX panels don’t have great display quality.

The fairly recent AMX MVP-7500 panels, for example, have noticeable object bleed problems. Draw an array of high-contrast objects and you will see vertical and horizontal shadow lines extending to the display edges. Careful brightness and contrast adjustments help. Their later MVP-8400 wireless panels use a much better (and bigger) display and look great…but not many working units show up on the secondary market at all, much less at great prices. As my Australian colleague noted, I am lucky…but much of the luck was in getting broken panels and spending hours fixing them.

So, if you want great (stunning, really) graphics and can’t afford recent AMX products, use an iPad. And don’t forget: the current crop of low-cost wireless serial port, IR output, and contact closure devices further blurs the capabilities matrix between what most of us can afford and what AMX offers in its incredibly broad portfolio. Consider Homeseer, HSTouch, and any of the many Homeseer-supported devices of every description to design a very comprehensive home automation and media center system.

I’ll write more about Homeseer’s HSTouch on an iPad, and about how to combine AMX and Homeseer HS2 to get the ultimate whole-house automation experience. In the meantime, here’s a preview of my HSTouch Security page on the same iPad.

I have replaced many of my older powerline-based lighting control devices with Z-wave RF (radio) technology. The reasons: Instant responsiveness, better control reliability, and a wider range of device types to handle more applications.

Here’s why

For the past 25 years I have used variations of X-10 powerline carrier technology to control lights and appliances in my home. For many years it was the only consumer technology available that didn’t require rewiring your house—something I did not want to do. Initially branded “BSR X-10” and sold mainly through Sears and Radio Shack, X-10 was essentially the only consumer installed remote control game in town.

X-10 branded products worked well enough but the company did little to advance their technology, at least for their low cost consumer-class devices. Eventually the X-10 patents expired and several companies developed compatible technologies. All were better at noise immunity and rejection, generally improving powerline carrier performance to an acceptable, if not totally reliable, level. Some RF products were developed that used the same signaling format as X-10, making very simple wireless controls possible.

With proper preparation X-10 can be made to be fairly reliable. So, why change to something else?

The main reason is that powerline-carrier technology still requires too much periodic attention. A home automation technology needs to be invisible to the owner. It may be OK if the initial installation takes some reasonable amount of tweaking and re-tweaking to make everything work; careful design and up-front planning are pretty much essential to make any automation technology effective and reliable. Once installed, though, it needs to ‘just work’.

X-10 makes ‘reliable’ difficult to achieve because there are too many external events that can kill operation without user awareness. That’s a direct path to dissatisfaction.

My real-world example is typical. I installed all of the helpful active and passive devices that boost, repeat, bridge, filter, and in any other way “condition” the AC power system to make powerline-carrier signals reliable. Eventually some unexpected, seemingly random noise on the powerline at least temporarily impairs or kills signals.

That noise can be introduced by simply plugging in some new device. Modular power supplies that come with just about every kind of electronic gadget are excellent sources of electrical hash; purchase a new laptop and suddenly half of your X-10 stuff stops working reliably.

There are X-10 noise filters, and they do work well…if you know you need one, and if you use it. I purchased a new Dell laptop. Its power brick killed about one fourth of my X-10 devices whenever it was plugged in, whether or not it was actually powering the laptop. A plug-in X-10 AC filter corrected the problem, but I have to remember to take it AND the power brick everywhere the laptop goes in the house. Sometimes the filter gets left behind.

Perhaps more significantly, there are other technologies that can compete for powerline bandwidth. Ethernet-over-powerline products, some wireless baby monitors, and a few of the latest generation of power-management and reporting devices can all kill X-10. I’ve had cold, rainy days kill X-10 and have no idea why. Even the number of X-10 devices on your network can hurt reliability; each one shunts some impedance across the powerline, lowering the overall signal level. Active repeaters can overcome this…but again, you would have to know you need them, and they would need to be purchased, strategically placed, etc. More work, more consideration.

The average consumer isn’t going to like that, Yogi.

There are alternatives to powerline-carrier technologies that overcome these limitations. Smarthome’s Insteon is one of them; I used it for a while very successfully until its manufacturer made continued use of it too much of a problem for me. That story could be a post all its own. Suffice it to say I still use a number of Insteon devices in X-10 compatibility mode, and they work very well. But I will not be purchasing any more Insteon products, ever.

Practical wireless RF alternatives to X-10 (like Z-wave and Zigbee) communicate to the controlled devices via tiny low-power radio transceivers within each device. Some use “mesh network” technology, meaning that the devices can talk to each other as well—they can relay control and status messages to devices that are not in direct range of the central controller. Done well this can be extremely effective and reliable. And, if the technology is an open standard (or at least the licensing options are reasonable), both cost and availability can be reasonable. You might even see a good variety of compatible devices from different manufacturers, along with a little competition to keep pricing even more reasonable. Z-wave has proven to be reliable in my Texas home and peacefully co-exists with me, my family, and all household technology.
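The relaying idea can be illustrated with a breadth-first search over a map of which devices can hear each other directly. This Python sketch is a generic fewest-hops route finder, not Z-wave's or ZigBee's actual routing protocol, which handle route discovery, hop limits, and failed links in their own ways; the device names and the layout of the house are hypothetical.

```python
from collections import deque


def relay_path(links, controller, target):
    """Breadth-first search for the fewest-hop route from controller to target.

    links maps each node to the set of nodes within direct radio range.
    Returns the list of nodes on the route, or None if target is unreachable.
    """
    previous = {controller: None}
    queue = deque([controller])
    while queue:
        node = queue.popleft()
        if node == target:
            # Walk the predecessor chain back to the controller
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbor in links.get(node, ()):
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None


# Hypothetical house: the controller cannot hear the garage switch directly,
# but the kitchen and hall dimmers can relay for it.
house = {
    "controller": {"kitchen"},
    "kitchen": {"controller", "hall"},
    "hall": {"kitchen", "garage"},
    "garage": {"hall"},
}
print(relay_path(house, "controller", "garage"))
# ['controller', 'kitchen', 'hall', 'garage']
```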

Let the conversion continue!

//

I mentioned in an earlier post that I am doing some contract work at Ambrado.

Ambrado designs and manufactures products for the Broadcast industry that also have application anywhere you need to transport audio and video across an IP network, a satellite link, or a microwave link.

This is very different from the world of mostly Internet Video streaming I spent the last 9 years in. It has already proven, in my short time here, to be a fascinating and rewarding learning experience. And a cool place to work.

Many Ambrado products use MPEG-2 compression to convert full-frame SDI SD and HD video into a compressed A/V transport stream. For years MPEG-2 has been used in virtually every aspect of broadcast video compression, transmission, and distribution. The exception is live Internet streaming, which uses several competing standards and almost never MPEG-2.

Today nearly all stored Broadcast media is processed and saved in MPEG-2 format, and most live backhaul, ingest, and production activity still uses MPEG-2 as the means for transporting high-quality video to key points in the broadcast ecosystem.

MPEG-2’s supremacy is being challenged by a relative newcomer: H.264, also known as MPEG-4 Part 10. In time, H.264 will likely replace MPEG-2 in all areas of broadcasting. It is already thriving in applications where its principal value (lower bitrates for equal picture quality) is critically important, and it is becoming the de facto compression standard for streaming.

You starting to see worlds collide here?

Not so fast…. It would be easy to believe MPEG-2 is going away fast, or at least not being advanced. Not true, and here’s why: It would be incredibly costly to replace any reasonable amount of MPEG-2 infrastructure just to get H.264’s advantages, so broadcasters won’t give up on it any time soon. In fact, they still demand innovation.

MPEG-2’s healthy prognosis is a good thing for Ambrado, since the same high-performance hardware and innovative video processing algorithms developed for H.264 are just as applicable to MPEG-2 products. Add in other advancements (lower hardware cost, better thermal efficiency, small and lightweight form factor, portability) and you can create a new generation of products that truly advance the state of the art of MPEG-2 encoding, transmission, decoding, and delivery.

That’s exactly what the design team did in our new Ambrado HC-series MPEG-2 encoder and decoder. By focusing development in two key areas–motion vector estimation and output bit rate management—the design team created products that set a new standard for high-quality MPEG-2 video at low bit rates…and with extremely low latency.

To help understand why focused attention to these two elements makes such a difference in our HC-series products, we need to take a quick look at what compression is all about.

Compression Fundamentals

Video is compressed because, uncompressed, it consumes and wastes far too much bandwidth. If you think of video as a continuous stream of still snapshots, it is easy to visualize that any two successive snapshots taken at a rate of 25 or 30 per second are not likely to differ all that much. Digitizing all of every snapshot would result in mostly identical information being transmitted over and over, particularly when there are no scene changes or fast-moving objects in the successive snapshots.

This would be a horribly inefficient use of bandwidth. Sending first a full frame, then sending only difference information, would consume a lot less bandwidth. That is the basis for video compression today.
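A toy version of "send a full frame, then only the differences" fits in a few lines. This Python sketch treats each frame as a flat list of pixel values and sends exact (position, value) pairs for the pixels that changed, so it is lossless. Real MPEG-2 encoding instead codes motion-compensated block differences with transforms and quantization, so treat this only as an illustration of the bandwidth argument.

```python
def encode(frames):
    """Send the first frame whole, then only (index, new_value) pairs that changed."""
    reference = list(frames[0])
    packets = [("full", reference.copy())]
    for frame in frames[1:]:
        diffs = [(i, v) for i, (old, v) in enumerate(zip(reference, frame)) if v != old]
        packets.append(("diff", diffs))
        reference = list(frame)
    return packets


def decode(packets):
    """Rebuild every frame by overlaying each diff on the previous frame."""
    frames = []
    current = None
    for kind, payload in packets:
        if kind == "full":
            current = list(payload)
        else:
            current = current.copy()
            for i, v in payload:
                current[i] = v
        frames.append(current)
    return frames


frames = [[10, 10, 10, 10], [10, 10, 12, 10], [10, 10, 12, 10]]
packets = encode(frames)
print(packets[1])                 # ('diff', [(2, 12)])
print(packets[2])                 # ('diff', [])
print(decode(packets) == frames)  # True
```

The unchanged third frame costs an empty difference list, which is the whole point: static content is nearly free after the first full frame.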

There are times when any two sequential frames, even at a rate of 25 or 30 per second, really are completely different (such as at scene change boundaries), or at least so massively different (as in fast-moving full-screen objects) that full encoding and transmission of the whole frame is mandatory. Even some low-motion scenes can cause some visibly subtle but technically massive differences in successive snapshots, such as the reflection of moving waves on water or the almost imperceptible motion of leaves on trees in a slight wind.

Since the whole idea behind compression is to reduce bandwidth by confining the output digital video stream to only what is essential to recreate the original series of snapshots, we can see opportunities to detect differences in sequential frames and send only what is different until the next massively-different frame. The idea is to re-use as much of the previous frame as possible, overlaying only the differences and doing your best to smooth out the places where you predict and insert differences.

Doing this well, in real time, is where the magic lies and opportunities to make significant improvements can be realized.

For this compression process to work, designers have to be very good at figuring out what changes occurred within each frame and (usually) between successive frames. Excellence comes from processing and communicating the changes in such a way that the viewer does not perceive any distortion, compression artifacts, or other impairments.

In non-live applications you can take all the time you need to comprehensively analyze any number of adjacent pixels or pixel groups or differences in nearby frames—you control how often a new frame is introduced from the source tape machine or server so there are no inviolate time restrictions. In live, real-time applications, you have only a fraction of a second to get everything done right before it is time to receive and process the next frame from the live source. And you have to assume that the incoming video content may be very complex (requiring more complex analysis and more CPU cycles) so your designs have to assume worst-case input (frequent changes) and still reliably complete everything in one frame-time.
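To put the one-frame-time limit in numbers, the sketch below divides a frame period by the number of 16x16 macroblocks (the MPEG-2 luma macroblock size) in an SD and an HD frame. It assumes round frame rates of 25 and 30 fps and a single thread working through the blocks one at a time, which real encoders avoid by running in parallel, so the result is only a rough sense of scale.

```python
import math


def frame_budget(width, height, fps, mb_size=16):
    """Return (ms per frame, macroblocks per frame, microseconds per macroblock)."""
    frame_ms = 1000.0 / fps
    # Partial blocks at the right or bottom edge still count as whole macroblocks
    mbs = math.ceil(width / mb_size) * math.ceil(height / mb_size)
    us_per_mb = frame_ms * 1000.0 / mbs
    return frame_ms, mbs, us_per_mb


for name, w, h, fps in [("SD 720x576 @ 25", 720, 576, 25),
                        ("HD 1920x1080 @ 30", 1920, 1080, 30)]:
    ms, mbs, us = frame_budget(w, h, fps)
    print(f"{name}: {ms:.1f} ms/frame, {mbs} macroblocks, {us:.2f} us per macroblock")
```

At HD rates that leaves only a few microseconds per macroblock, which is why the analysis cannot be exhaustive.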

There has been a great deal of research into how best to analyze one or more frames of video to detect what changed, and just as many ways of drawing conclusions from that data and intentionally modifying the resulting output to minimize the amount of transmitted data with minimal impairment of the video at the final destination. Most techniques involve dividing a frame into a mosaic of small blocks of pixels called macroblocks. Comparing corresponding blocks in two successive frames identifies whether something did (or did not) change, and the block can be marked for more detailed processing. Comparing corresponding blocks in three or more successive frames can be even more helpful, although that technique forces you to store those frames in a first-in-first-out buffer. This adds latency, a measure of how many milliseconds elapse between the time a frame arrives at the Encoder and the time it has been completely compressed, transmitted, and reconstituted by the Decoder. Latency is one of those things that everyone strives to make as low as possible without compromising video quality, for reasons we’ll detail later.
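A simple way to mark changed macroblocks is to compute the sum of absolute differences (SAD) between corresponding blocks of two frames and flag any block above a threshold. This Python sketch assumes frames are 2D lists of luma values and takes a caller-chosen block size and threshold (both hypothetical tuning knobs); blocks that would run past the right or bottom edge are simply skipped.

```python
def block_sad(prev, curr, top, left, size):
    """Sum of absolute differences over one size x size block."""
    return sum(
        abs(curr[y][x] - prev[y][x])
        for y in range(top, top + size)
        for x in range(left, left + size)
    )


def changed_blocks(prev, curr, size=16, threshold=0):
    """Return (block_row, block_col) coordinates whose SAD exceeds threshold."""
    height, width = len(curr), len(curr[0])
    changed = []
    for top in range(0, height - size + 1, size):
        for left in range(0, width - size + 1, size):
            if block_sad(prev, curr, top, left, size) > threshold:
                changed.append((top // size, left // size))
    return changed


prev = [[0] * 4 for _ in range(4)]
curr = [row.copy() for row in prev]
curr[3][0] = 50  # one pixel changes in the lower-left 2x2 block
print(changed_blocks(prev, curr, size=2))  # [(1, 0)]
```

Only the flagged blocks would go on to the more expensive motion analysis.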

There are several challenges that make this motion processing difficult. The first is the aforementioned lack of time: you typically have about one-twenty-fifth to one-thirtieth of a second to process a whole frame, which means you may not be able to analyze every macroblock and must, in effect, do some serious guessing about where motion is likely to be in the frame. Some very clever and sophisticated statistical analysis techniques get applied here. For example, an algorithm may be designed to detect motion in a series of macroblocks by picking one and comparing changes in the surrounding blocks. Properly analyzed, the direction of movement lets you predict which other blocks are most likely to be different. Groups of macroblocks that show little difference can be left largely alone; blocks in the areas where motion was detected are modified and sent on to the decoder to be overlaid and smoothed into the corresponding location of the previous or next frame.
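The textbook baseline for finding where a block moved is a full search: try every offset within a window of the reference frame and keep the one with the lowest SAD. Real-time encoders cannot afford this everywhere, which is exactly why they predict where to look instead. This Python sketch shows only the exhaustive version, with a hypothetical ±7-pixel default window, so the guessing strategies can be read as shortcuts around it.

```python
def sad(ref, cur, ref_top, ref_left, top, left, size):
    """SAD between the current block at (top, left) and a reference block."""
    return sum(abs(cur[top + y][left + x] - ref[ref_top + y][ref_left + x])
               for y in range(size) for x in range(size))


def motion_vector(ref, cur, top, left, size=16, search=7):
    """Full search within +/-search pixels; returns ((dy, dx), cost).

    (dy, dx) is the offset from the current block's position to its best
    match in the reference frame.
    """
    height, width = len(ref), len(ref[0])
    best = (0, 0)
    best_cost = sad(ref, cur, top, left, top, left, size)
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            rt, rl = top + dy, left + dx
            # Skip candidate blocks that would fall outside the reference frame
            if rt < 0 or rl < 0 or rt + size > height or rl + size > width:
                continue
            cost = sad(ref, cur, rt, rl, top, left, size)
            if cost < best_cost:
                best_cost, best = cost, (dy, dx)
    return best, best_cost


ref = [[0] * 6 for _ in range(6)]
cur = [[0] * 6 for _ in range(6)]
for y in (1, 2):
    for x in (1, 2):
        ref[y][x] = 100          # bright 2x2 square in the reference frame
        cur[y + 1][x + 2] = 100  # same square, one row down and two columns right
print(motion_vector(ref, cur, top=2, left=3, size=2, search=3))  # ((-1, -2), 0)
```

The cost grows with the square of the search window, which is why exhaustive search does not fit in one frame-time at HD.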

The actual practice is, of course, much more complex. There are mathematically sound ways to effect de-noising, de-interlacing, smoothing, etc; all are important in realizing the best possible video quality. One other consideration: Even when successive frames of video bear little real difference you can send difference information only so long before your guesses, mathematics, and other magic can no longer maintain a great picture. At periodic intervals a full frame is forced, just to have a new pristine reference.

So what makes one MPEG-2 compression algorithm better than another, and how can something that is standards-based be different from vendor to vendor and not create interoperability issues?

The MPEG-2 standards define things like macroblock size, color depth (the number of bits available to specify the unique luminance and color of each pixel), and how many of the pixels are sampled for color. The human eye does not require every pixel to be sampled for color, so some schemes encode color information for only one in two or one in four pixels horizontally and take similar liberties vertically.

The standards also provide for user-controlled settings for how many full frames (I-frames), predicted (computed) frames, and other frames make up a Group of Pictures (GOP) in the digital transport stream. And the standards define exactly where and in what order the blocks of video show up; you wouldn’t want blocks re-assembled in the wrong position or order.
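A GOP structure is often described by two numbers: the GOP length (commonly called N) and the spacing between anchor I- or P-frames (commonly called M). The Python sketch below uses those conventional names, which may not match the labels on any particular encoder, and prints the display-order pattern of frame types for a given N and M.

```python
def gop_pattern(n, m):
    """Display-order frame types for a GOP of n pictures with anchor spacing m.

    n: total pictures per GOP (the I-frame interval)
    m: distance between anchor (I or P) pictures; m - 1 B-frames sit between anchors
    """
    if n < 1 or m < 1:
        raise ValueError("n and m must be positive")
    return "".join("I" if i == 0 else ("P" if i % m == 0 else "B") for i in range(n))


print(gop_pattern(12, 3))  # IBBPBBPBBPBB
print(gop_pattern(6, 1))   # IPPPPP
```

Display order is not transmission order: each B-frame depends on the anchor that follows it, so the encoder must send that later anchor first. That reordering is one reason B-frames add latency.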

But…and this is a big but…the manufacturer has a great deal of freedom on exactly how to go about determining what pixel information is put into those blocks. In other words the motion estimation and analysis math can be entirely proprietary. How well the complex mathematical algorithms do their analysis and prediction, ultimately to make informed decisions on what pixels to modify or place in those blocks, is a key factor in achieving outstanding picture quality for any kind of input video– talking head, extreme motion, and anything in between.

The best of these algorithms detect these very characteristics and alter their own computational processes on-the-fly.

Why Processing Horsepower Matters

More processing power gets you more time and CPU cycles to do something truly exotic to optimize the output stream. Remember, in real-time encoding you only have one frame-time to get everything done before time is up and you must move on to the next frame. More CPU = more time to perform complex analysis and optimization routines.

Some of the science behind this optimization is not particularly new; it is modern super-fast multi-core processors that have made applying it in real time practical. The recipe each manufacturer uses for such pixel prediction and manipulation is often a well-guarded secret.

Balancing Picture Quality, Bitrate, and Latency

Alas, this signal processing magic does not alone a great Encoder make. Two more elements enter the picture: Latency and Bitrate. Both have a great deal of impact on what you can reasonably do to get the best possible picture quality under the most adverse conditions.

Let’s tackle Bitrate first. That is the data transmission rate, expressed in bits per second, at the output of the Encoder. The bitstream is typically an MPEG-TS (transport stream) delivered in a standards-driven packet structure for DVB-ASI or in any of several IP transport formats.

The transport stream carries all sorts of data (video, audio, multichannel audio, ancillary data) and a fair amount of administrative overhead. Encoders offer settings for specifying the target TS bit rate, which must be set to something compatible with your application and the bandwidth you have available. If you have your own personal, uncongested 100 Mbit or 1 Gigabit network, the TS bit rate can be set very high. Relatively little compression is needed at such high rates, so picture quality (PQ) remains high. The processors still have to keep up, so more power is helpful here too, but your enhancement routines don’t have to be extremely clever.

Drive the available bandwidth down to the 15-20 megabit range (and considerably lower for H.264) and suddenly encoding video while limiting the number of bits you can send per second gets a little dicey. HD content is even more challenging, since one frame of HD video (1920x1080) has roughly five to six times as many pixels to process as one SD frame (720x576 or 720x480). Fancy games are played to make this happen; some manufacturers are better at them than others.
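Some quick arithmetic shows why lower rates get dicey. The sketch below divides a transport stream bit rate by the frame rate to get the average bit budget per frame, then spreads that budget over SD and HD pixel counts. It ignores TS overhead and audio, and the 15 Mbit/s and 30 fps figures are simply example values taken from the range mentioned above.

```python
def bits_per_frame(bitrate_bps: float, fps: float) -> float:
    """Average bits available for each frame at a constant bit rate."""
    return bitrate_bps / fps


def bits_per_pixel(frame_bits: float, width: int, height: int) -> float:
    """Spread one frame's bit budget evenly over its pixels."""
    return frame_bits / (width * height)


SD = (720, 480)
HD = (1920, 1080)

budget = bits_per_frame(15_000_000, 30)  # example: 15 Mbit/s at 30 fps
for name, (w, h) in (("SD", SD), ("HD", HD)):
    print(f"{name}: {bits_per_pixel(budget, w, h):.2f} bits per pixel")
print("HD/SD pixel ratio:", (HD[0] * HD[1]) / (SD[0] * SD[1]))
```

With those numbers each HD pixel gets roughly a quarter of a bit on average, against about one and a half bits per SD pixel.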

First, you can reduce how many places you sample color information (Chroma) in each frame relative to Brightness (luminance or Luma), as previously noted. The average human is quite tolerant of such shenanigans. You will see the color sampling selection in your encoder and decoder expressed as something like 4:4:4 (every pixel carries color information), 4:2:2 (color is sampled at every other pixel horizontally), and 4:2:0 (color is sampled at every other pixel both horizontally and vertically). Details of how this is accomplished may be found in many places on the Internet; suffice it to say that the fewer bits you send per macroblock because of reduced color sampling, the lower the TS data rate can be, with relatively minor compromise in video quality. At least to the untrained eye.
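The savings from reduced color sampling are easy to count. This Python sketch counts luma samples plus the two chroma planes (Cb and Cr) per frame for each scheme and converts that into an uncompressed bit rate. The 8-bit depth and 30 fps values are assumptions for illustration.

```python
# Chroma samples per luma sample, per chroma plane:
# 4:4:4 keeps full-resolution Cb and Cr, 4:2:2 halves chroma horizontally,
# and 4:2:0 halves it both horizontally and vertically.
CHROMA_FACTOR = {"4:4:4": 1.0, "4:2:2": 0.5, "4:2:0": 0.25}


def samples_per_frame(width, height, scheme):
    """Total luma plus chroma samples in one frame."""
    luma = width * height
    chroma = 2 * luma * CHROMA_FACTOR[scheme]  # two chroma planes, Cb and Cr
    return int(luma + chroma)


def raw_bitrate(width, height, fps, scheme, bit_depth=8):
    """Uncompressed bits per second for the given sampling scheme."""
    return samples_per_frame(width, height, scheme) * bit_depth * fps


for scheme in CHROMA_FACTOR:
    mbps = raw_bitrate(1920, 1080, 30, scheme) / 1e6
    print(f"{scheme}: {samples_per_frame(1920, 1080, scheme)} samples, {mbps:.0f} Mbit/s raw")
```

For 1920x1080 at 30 fps, 4:2:0 works out to about 746 Mbit/s before any compression, exactly half the 4:4:4 figure.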

Another trick is to reduce horizontal resolution: throw away some “columns” of pixels during capture and sampling, encode what remains, and configure the Decoder to estimate and drop in replacements to restore full horizontal resolution. In actual practice some complex math is used to horizontally scale the video rather than simply discarding columns. This preserves the entire picture (nothing is cut off) but eliminates some of the detail. Done well, this harms video quality less than you might imagine. Most encoders offer user controls for setting horizontal downsampling and color sampling options.
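The scaling math can be as simple as linear interpolation between neighboring pixels, though production scalers typically use longer filters to limit aliasing and blur. This Python sketch resamples one row of pixel values to a new width, for example from 1920 to 1440 samples per line. The function name is hypothetical, and a decoder would run the same kind of routine in the other direction to restore the original width.

```python
def resample_row(row, new_width):
    """Linearly interpolate a row of pixel values to new_width samples."""
    if len(row) < 2 or new_width < 2:
        raise ValueError("need at least two input and two output samples")
    scale = (len(row) - 1) / (new_width - 1)  # input step per output sample
    out = []
    for i in range(new_width):
        pos = i * scale
        left = int(pos)
        right = min(left + 1, len(row) - 1)
        frac = pos - left
        # Weighted blend of the two nearest input pixels
        out.append(row[left] * (1 - frac) + row[right] * frac)
    return out


print(resample_row([0, 10, 20, 30, 40], 3))  # [0.0, 20.0, 40.0]
print(resample_row([0, 20, 40], 5))          # [0.0, 10.0, 20.0, 30.0, 40.0]
```

A smooth ramp survives the round trip exactly; fine detail such as a one-pixel line does not, which is the detail loss described above.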

At really low data rates the encoder has to resort to more drastic measures to reduce the bit rate. One method is to make the macroblocks larger, directly reducing the amount of analysis and difference information transmitted. This risks more visible “blockiness” in the recovered video.

Another option is to reduce the amount of motion estimation and vector processing, which essentially means the reconstitution process will more frequently reuse older, already-transmitted blocks. The risk here is that an older block may no longer adequately represent what needs to be displayed in that position: too much has changed since then. This is most noticeable as block outlines on subtle color shade differences, and it can result in severe distortion if not done well.

Doing all of this well is another opportunity for a developer to apply some sophisticated design magic to get the best possible compromise of video quality at low data rates.

Latency

Broadcasters are always interested in reducing latency– the delay inherent in digital video processing caused by the need to capture and process at least one full frame before releasing a frame for display.

The best processes available for analyzing and compressing a continuous stream of incoming video frames employ something called temporal analysis– compression techniques that analyze various elements of a series of frames to detect differences. The process goes something like this:

- The capture hardware receives the first full frame of video. This may take as much as two frame-times since capture may have started just after the beginning of the first frame, resulting in an incomplete frame that is typically discarded.

- Spatial analysis begins on the first full frame. Spatial analysis analyzes blocks of pixels within a single frame.

- The next full frame is captured. Spatial analysis can begin on that frame, and temporal analysis of the first two frames can begin.

- The third full frame is captured. Spatial analysis can begin on that frame, and temporal analysis of all three frames can begin, potentially including both forward and backward analysis of differences between the second and first frame, and the second and third.

- The first frame is fully encoded and sent.

- The encoder calculates differences using information gleaned from all three (or more) initial frames and generates difference data to be overlaid on the already-sent first frame.
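Why bother waiting for that third frame? A toy sketch makes the trade-off visible. Each “frame” here is just a short list of hypothetical brightness values, and predicting a frame from the average of its neighbors is one simple stand-in for the forward-and-backward analysis described above, not a specific encoder's method.

```python
def forward_residual(prev, cur):
    """Difference data when the current frame is predicted from the previous one."""
    return [c - p for p, c in zip(prev, cur)]


def bidirectional_residual(prev, nxt, cur):
    """Difference data when the current frame is predicted from the average of
    the previous and the next frame (the next frame must already be captured)."""
    return [c - (p + n) / 2 for p, n, c in zip(prev, nxt, cur)]


if __name__ == "__main__":
    frame1 = [10, 20, 30, 40]   # already encoded and sent
    frame2 = [12, 22, 32, 42]   # frame being encoded
    frame3 = [14, 24, 34, 44]   # later frame, captured before frame2 is finished
    print(forward_residual(frame1, frame2))                # [2, 2, 2, 2]
    print(bidirectional_residual(frame1, frame3, frame2))  # [0.0, 0.0, 0.0, 0.0]
```

Looking both ways shrinks the difference data to nothing in this steadily brightening scene, but frame 2 can't be finished until frame 3 has been captured. That extra wait is the price in latency.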

All of this means an initial delay of typically 3 to 4 frame-times: the time needed to capture the first full frame, plus the additional time to process it and transmit the video to the far end. The far-end Decoder adds further delay, since it has its own decoding methods that also involve buffering and mathematical reconstruction. And the data link between the Encoder and Decoder can add noticeable delay as well, especially when using satellite links.
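Converting frame-times into milliseconds shows how quickly the pieces add up. In this minimal sketch the 3 to 4 frame encoder delay comes from the text; the 2-frame decoder buffer and the roughly 250 ms for one geostationary satellite hop are assumed round figures, and both function names are hypothetical.

```python
def frame_time_ms(fps: float) -> float:
    """Duration of one frame in milliseconds."""
    return 1000.0 / fps


def end_to_end_latency_ms(fps: float, encoder_frames: float,
                          decoder_frames: float, link_ms: float) -> float:
    """Rough glass-to-glass estimate: encoder and decoder delay counted in
    frame-times, plus a fixed one-way transport delay for the link."""
    return (encoder_frames + decoder_frames) * frame_time_ms(fps) + link_ms


if __name__ == "__main__":
    fps = 30000 / 1001                  # 29.97 fps
    for enc in (3, 4):                  # encoder delay range from the text
        print(f"encoder alone, {enc} frames: {enc * frame_time_ms(fps):.1f} ms")
    # Hypothetical: 2 frames of decoder buffering plus ~250 ms for one
    # geostationary satellite hop (assumed round figure).
    print(f"with decoder and satellite link: "
          f"{end_to_end_latency_ms(fps, 4, 2, 250):.0f} ms")
```

At 29.97 fps one frame-time is about 33.4 ms, so the encoder's initial delay alone is roughly 100 to 133 ms before the decoder or the link contributes anything.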

So… what can be done to reduce latency? Since this is real-time encoding, we already know there can be no added, incremental latency once the encode ball gets rolling: we aren’t allowed to drop frames, so each frame has to be processed within one frame-time.

Given that we all solemnly swear never to drop a single frame, the only real option to reduce latency is to reduce the initial delay. This is done largely by turning off “backward” temporal analysis—that is, don’t hold up initial delivery to allow an examination of a series of frames before the first calculated frame information is sent. This limits picture quality optimization options, putting nearly the entire heavy optimization load on spatial analysis.
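In MPEG-style codecs, “backward” prediction commonly shows up as B-frames, which reference a later I or P frame. Treating the text's backward temporal analysis as B-frames is an interpretation, not something stated above. The sketch shows how a B-frame pattern forces the encoder to capture later frames first and send frames out of display order, while an all-I/P pattern needs no lookahead at all. The pattern strings and function names are hypothetical.

```python
def _check(pattern: str) -> None:
    if not pattern or pattern[-1] not in "IP" or set(pattern) - set("IPB"):
        raise ValueError("pattern must use I/P/B and end on an I or P frame")


def coding_order(pattern: str) -> list[int]:
    """Display-order indices rearranged into transmission (coding) order.
    Each B-frame is held back until the I/P frame after it has been coded."""
    _check(pattern)
    order, held_b = [], []
    for i, frame_type in enumerate(pattern):
        if frame_type == "B":
            held_b.append(i)       # needs the *following* anchor first
        else:
            order.append(i)        # code the I/P anchor...
            order.extend(held_b)   # ...then the B-frames that were waiting on it
            held_b.clear()
    return order


def capture_lookahead(pattern: str) -> int:
    """Worst-case number of later frames that must be captured before a frame
    can be coded (0 when there is no backward prediction)."""
    _check(pattern)
    worst, next_anchor = 0, None
    for i in range(len(pattern) - 1, -1, -1):
        if pattern[i] in "IP":
            next_anchor = i
        else:
            worst = max(worst, next_anchor - i)
    return worst


if __name__ == "__main__":
    for pattern in ("IBBPBBP", "IPPPPPP"):
        print(pattern, coding_order(pattern), capture_lookahead(pattern))
```

With two B-frames between anchors, the encoder must wait two extra frame-times before it can code the first B-frame; switching to I/P only removes that wait, at the cost of the quality gains bidirectional prediction would have provided.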

The Ambrado HC-series Encoder and Decoder

Ultimately, designing a great encoder/decoder product that does everything (handles fast motion without dropping frames, offers great picture quality even at low data rates, and produces industry-leading picture quality in Low Latency modes) remains an incredibly challenging undertaking.

ViewCast, my former employer, issued a somewhat whimsically titled press release a couple of days ago that calls attention to an interesting application of the Niagara 7500 HD streaming media encoder at Hard Rock’s “The Joint” concert venue in Las Vegas.

The Hard Rock story is a good one all by itself, so I’ll start with a couple of links to get you to the press release and to the Hard Rock Vegas web site:

- The Joint is Jumping and Streaming with ViewCast’s Niagara 7500 (PR Newswire)

- The Joint at the Hard Rock Hotel- Las Vegas

My Product Engineering team conceptualized, designed, and released the ’7500 while I was still employed at ViewCast, and we all had an absolute blast doing it. So I thought this might be as good a time as any to tell some of its development story, as I did earlier in this Blog series about the first Niagara PowerStream encoder.

In Episode 1, recall that we had already established the basic 2U form factor and the video-screen-left, text-display-right front panel layout in the first PowerStream concept drawings in 2005. At the time, Mark Fears incorporated the ViewCast “swoosh” (we never could think of anything better to call it) from the corporate logo as a prominent, defining color separator somewhere in the middle. Those design cues continued on later PowerStream models, and we wanted to keep all those elements if possible to maintain something of a family resemblance.

We liked the flat Mylar overlay of the older products well enough, but from the beginning we pressed for an opportunity to do something really creative for our flagship High-Definition product. Working against us were strong system-level competitive HD products that were starting to appear on the landscape. That “get something on the market” pressure made us consider using a conventional server chassis and introducing HD on something functional but, ultimately, heavily compromised. Fortunately, the decision was quickly made to go for something the market would receive enthusiastically, knowing we would not be first to market.

As the green-lighting process continued, the following major design goals emerged:

- Preserve the family look and feel

- Design the front panel assembly for easier assembly and maintenance

- Make the front Preview Video display movable for a wide viewing angle and to improve display appearance wherever the unit is placed in a rack

- Eliminate the rather costly multilayered front panel overlay with embedded LEDs and metallic dome touch-buttons

- Create a glass-smooth, no-wear, last-forever, always-look-great, high-contrast front panel at minimum cost

- Consider a custom die cast, chrome metal frame surrounding the touch-sensitive glass control panel (appearance and protection)

- Design the front panel and the rest of the unit for 24/7/365, press-on-regardless, can’t-kill-this-thing operation

- Make sure users can interact with it using the same UI, extensive remote control interface, and SCX software our customers were already familiar with

- Oh, yes, almost forgot…It had to accept SD or HD-SDI input.

Rick Southerland, the 7500’s project leader and principal system designer, created and captured the great new look and feel (again in Corel Draw), then worked with Marketing to refine the final appearance. It didn’t change much; most of Rick’s beautiful original concept drawings made it all the way through to production. My Osprey team had already developed the Osprey 700eHD capture card, the logical choice for a capture device in any Niagara HD design. The card was designed from the beginning with an expansion connector, so we set about designing the internal electronics to add AES digital audio and analog balanced and unbalanced inputs to the 700e base card. Those were the major new hardware and firmware components for the 7500, and the Osprey team went to work designing their first system-level components. It proved to be an exciting, new, and successful experience for them.

That took care of the audio and video capture components, but the front panel presented several new challenges for us. We wanted to make the front panel a separate assembly, which made it a complex combination of sheet metal, glass, and some molded escutcheon parts that all had to fit together with considerable precision. We didn’t have any in-house 3D CAD design capability at the time, so we purchased the necessary tools and equipment and brought in a very bright young man who ultimately blew us away with his exceptional talent as a 3D CAD designer. In no time he and Rick had the first prototype drawings for the entire system’s mechanicals, including mold drawings for custom plastic parts.

We reused the basic character-display circuits from previous designs, but since the Video display was to be a true rendering of the captured HD input (scaled as needed to fit the display), the older SD video designs were of no use. Design began on new Display and Rendering logic.
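For the curious, “scaled as needed to fit the display” usually boils down to an aspect-preserving fit. A minimal sketch follows; the 320x240 preview panel is a hypothetical size (the actual 7500 panel resolution isn't given here), and `fit_preserving_aspect` is a hypothetical helper.

```python
def fit_preserving_aspect(src_w: int, src_h: int, dst_w: int, dst_h: int):
    """Largest src-shaped rectangle that fits inside dst, plus the offsets
    that center it (letterbox/pillarbox bars fill the rest)."""
    scale = min(dst_w / src_w, dst_h / src_h)
    out_w, out_h = round(src_w * scale), round(src_h * scale)
    return out_w, out_h, (dst_w - out_w) // 2, (dst_h - out_h) // 2


if __name__ == "__main__":
    # 1920x1080 HD input on a hypothetical 320x240 (4:3) preview panel
    print(fit_preserving_aspect(1920, 1080, 320, 240))
```

A 16:9 HD picture on a 4:3 panel ends up 320x180 with 30-pixel bars above and below, so the preview shows the whole frame without distortion.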

We wanted a tempered-glass touch interface, somewhat like those used on some high-end kitchen appliances. That meant developing a technology we had no internal experience with. Or any familiarity.

The first thing to do was find out who in the world did it right, and then find out how. That led us to the ugliest appliance ever produced in the history of kitchen automation, the Jenn-Air Attrezzi Countertop Blender shown at left. Rick found the first one on eBay and we had the thing strewn out over Lab Bench 1 in no time. Ugly it was, but we had it on good authority that its touch controls worked flawlessly no matter who touched them, regardless of room temperature, and in the hostile environment created by being only inches away from a strong magnetic field (the motor), a strong source of mechanical stress and vibration (the motor), extremely strong EMI/RFI hash of every description and frequency (the motor), and relatively high-voltage, high-current control that just begs for inductive noise coupling (the motor).

We figured if touch control electronics could work reliably in this thing, we couldn’t go wrong using the same technology in our design. Turns out we were right.

With all technology chosen and vetted we started on final designs. Not much to detail from that point except some exceptionally fine work from the hardware design team.

But we also had lots to do in Software. Although the Osprey 700eHD card had been on the market for a couple of years by this time, we had never advanced ViewCast’s SCX streaming control software to support HD. Even more significantly, the Osprey 700eHD was the first Osprey card that could use the same video input with more than one audio input at the same time. Some of our other cards had multiple independent inputs, but this card allows the user to input HD-SDI with embedded audio for Output Stream 1, and use the same video input with different, external audio for Output Stream 2. In other words, the card has a little drop/insert audio mux on it, and SCX had no mechanism to control it. Marrying up SCX with the 7500 therefore took a considerably larger effort than we had ever undertaken on that product, and some new Adaptive Streaming technologies had to be developed as well.

These were all real challenges, but my fabulous Software team pulled it off in time for everything (software, firmware, microcode, and all hardware) to come together just before NAB 2009, about one year ago this week. Some of those guys are back this year introducing ViewCast’s latest streaming encoder products and showing this year’s advancements to the new Ancept-based VMp product. There are some good stories for future posts about the Ancept software development team and the advancement of that product during 2009. I only led that team for about a year before things suddenly changed, barely enough to know ’em. But more than enough to know how talented they are, too.

Good times.

//Mark